Ijraset Journal For Research in Applied Science and Engineering Technology

Revolutionizing Retail with Smart Shopping Carts: An AI and IoT Approach to Automated Checkout

Authors: S. S. Jadhav, Darshan Tholiya, Jaysheel Dodia, Venkatesh Soni

DOI Link: https://doi.org/10.22214/ijraset.2024.62589

Abstract

AI has transformed many industries, and retail is no exception. This paper analyzes the use of artificial intelligence (AI) in smart shopping carts, with the goal of modernizing the conventional shopping experience. Our smart cart system uses the YOLOv8 model to detect barcodes and QR codes and the Pyzbar library to decode them, enabling smooth product scanning and checkout. To improve accuracy and security, we propose a phased transition from barcodes to QR codes. We envision AI-enabled smart carts replacing long lines and laborious checkout processes with quick, easy shopping. This study opens the door to new developments in customer engagement and retail operations, and it contributes to the ongoing discussion of AI in retail settings.

I. INTRODUCTION

The retail sector is at the forefront of innovation in a rapidly changing technology landscape and is increasingly adopting artificial intelligence (AI) solutions. Smart cart systems are redefining traditional shopping, which is typified by long checkout lines and laborious product scanning. This study examines the potential of AI-driven smart carts to improve operational efficiency and simplify shopping in retail settings. A point-of-sale (POS) system is integrated into the cart's interface, so customers can scan products with barcodes or QR codes as they move through the aisles and complete their transactions at the cart. Our smart cart solution uses the YOLOv8 model for barcode detection and the Pyzbar library for decoding, a substantial departure from conventional retail procedures. We further propose a strategic move toward QR codes, which offer better security, accuracy, and usability than traditional barcodes. We envision a future in which the broad adoption of smart carts makes retail experiences more efficient, more convenient, and more satisfying for customers. This study adds to the growing body of knowledge at the intersection of AI and retail by offering new perspectives on how smart cart systems could transform the shopping experience.

II. BACKGROUND AND RELATED WORK

Barcode detection techniques in computer vision have advanced rapidly in recent years, and these advances underpin the smart carts that are changing the retail industry. Earlier systems required users to manually align the camera with the barcode for accurate detection. Recent studies, by contrast, focus on interaction-free solutions that detect barcodes fully automatically, a requirement for the seamless shopping experience that smart carts aim to provide.

Katona et al. [9] built one of the first barcode detectors using morphological operators. Later methods explored a range of geometry-based strategies to improve detection efficiency and precision. Creusot et al. [3] cropped barcode regions by intensity using candidate line segments, while Namane et al. [4] scored how well line segments fit a barcode description based on their length and orientation. Li et al. [10] used maximally stable extremal regions (MSERs) to identify barcode orientation and filter out background noise. These techniques exploit the alternating thin and thick bars intrinsic to 1D barcodes as visual cues for identifying line segments and gradients in orthogonal directions.
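To make the gradient-based idea concrete, the sketch below localizes a single upright 1D barcode using image gradients alone: bars produce strong horizontal gradients and weak vertical ones, so the difference |Gx| - |Gy|, smoothed with a box filter and thresholded, highlights the barcode region. It is a simplified illustration in the spirit of the geometric methods above, not a reimplementation of any cited detector. It assumes roughly vertical bars and one barcode per image, and the function names, kernel size `k`, and threshold `thresh` are hypothetical, illustrative choices.

```python
import numpy as np


def box_filter(img, k):
    """Mean of each k x k neighbourhood (k odd), computed with an integral image."""
    pad = k // 2
    p = np.pad(img, pad, mode="edge")
    ii = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    s = ii[k:k + h, k:k + w] - ii[:h, k:k + w] - ii[k:k + h, :w] + ii[:h, :w]
    return s / (k * k)


def localize_1d_barcode(gray, k=15, thresh=0.5):
    """Return (x0, y0, x1, y1) around the strongest barcode-like region, or None.

    Assumption: one barcode in view, with roughly vertical bars.
    """
    g = gray.astype(float)
    gy, gx = np.gradient(g)                            # derivatives along rows, then columns
    resp = np.clip(np.abs(gx) - np.abs(gy), 0, None)   # bars: strong Gx, weak Gy
    resp = box_filter(resp, k)                         # merge individual bar edges into one blob
    if resp.max() <= 0:
        return None                                    # no barcode-like texture at all
    ys, xs = np.nonzero(resp > thresh * resp.max())
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())
```

A production detector would also handle rotation and multiple candidates (for example by keeping only the largest connected region), which is where the deep-learning approaches discussed next come in.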

Although these techniques extract 1D barcode regions well and perform well on benchmark datasets, localization remains difficult, especially for high-resolution images or for 1D barcodes embedded in cluttered backgrounds: larger images require more computation, which slows processing. Smart cart systems must address this limitation with efficient barcode detection techniques.

Deep learning approaches have shown promise in resolving localization issues in barcode detection. Hansen et al. [6] used object detection networks to locate barcodes and estimate their rotation angles, an approach that could also improve the functionality of smart carts. Combining deep learning with geometric approaches, for example object detection networks such as YOLO [7,15,16] with line segment detection algorithms such as LSD [4], has the potential to make barcode localization more efficient and accurate, which is important for the smooth operation of smart carts in retail settings.
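As an illustration of a geometric step that could follow a detector such as YOLO, the sketch below estimates the orientation of a cropped barcode from the gradient structure tensor: the dominant gradient direction runs across the bars, so rotating the crop by the negative of this angle makes the bars vertical. This structure-tensor method is a swapped-in technique chosen for brevity; it is neither LSD nor the angle-regression approach of the cited work, and the function name is hypothetical.

```python
import numpy as np


def dominant_gradient_angle(crop):
    """Angle in degrees, within (-90, 90], of the dominant gradient direction in a crop.

    For a 1D barcode this is the direction across the bars (image y axis points down).
    """
    g = crop.astype(float)
    gy, gx = np.gradient(g)
    # Entries of the 2x2 structure tensor, summed over the whole crop.
    jxx = np.sum(gx * gx)
    jyy = np.sum(gy * gy)
    jxy = np.sum(gx * gy)
    # Orientation of the tensor's principal eigenvector.
    return float(np.degrees(0.5 * np.arctan2(2.0 * jxy, jxx - jyy)))


# Synthetic bars whose normal is tilted 30 degrees from the x axis.
yy, xx = np.mgrid[0:128, 0:128]
theta = np.radians(30)
bars = 127.5 + 127.5 * np.sin(2 * np.pi * (xx * np.cos(theta) + yy * np.sin(theta)) / 16)
print(round(dominant_gradient_angle(bars), 1))
```

Because the estimate uses image statistics only, it needs no training data, but it assumes the crop is dominated by the barcode; clutter inside the detected box biases the angle.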

A. Retail Revolutionization

The Emergence of Smart Carts:

The development of smart carts and advances in barcode detection are converging to transform the retail experience. Smart carts combine modern barcode technology, sensors, and data analytics to speed up shopping and improve customer satisfaction.

Smart carts have gained popularity in the retail industry primarily because they address major pain points of traditional shopping, such as long checkout lines and difficult inventory management. Pioneering work by startups such as Caper and by corporations such as Amazon, with its Amazon Go stores, has shown that deep learning, computer vision, and sensor fusion can enable frictionless shopping. For retailers, adopting these technologies has brought better inventory management, lower labor costs, and higher operational efficiency. Retailers can also collect useful data on customer behavior and preferences to improve the overall shopping experience and refine their marketing.

For customers, smart carts offer flexibility and convenience: they can shop, scan products, and complete transactions without difficulty. Features such as digital receipts and automatic payment processing remove the need for traditional checkout lanes, reducing friction and saving time. Because barcode scanning and data processing are the core components of smart cart technology, advances in barcode detection directly enable these retail solutions. As the technology matures, smart carts are positioned to become more capable and to keep pace with the changing demands of customers and retailers. The rise of smart carts and the advancement of barcode detection are thus closely intertwined in transforming the retail environment.

III. DESIGN AND IMPLEMENTATION

Designing and implementing the AI-powered smart cart system required careful planning, deliberate architectural choices, and tight integration of hardware and software. This section covers the training process for the YOLOv8 models, the model architecture, the automated point-of-sale system, and the system's performance, followed by scalability and maintenance considerations intended to keep the system feasible across a variety of retail settings.

A. Training Process

Training began with selecting datasets and carefully annotating them so that the labels would be accurate. The datasets, which contain annotated images for training and testing, cover a wide range of real-world conditions. They were then uploaded to Roboflow, a platform for efficient data preprocessing and management. The goal of this methodical approach was to maximize the model's performance across different retail settings. The YOLOv8 models use anchor-free detection: rather than adjusting predefined anchor boxes, each location on the feature map predicts the object's box directly relative to that point, which improves efficiency and speed.
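The anchor-free decoding step can be sketched as follows. Assuming the formulation used by Ultralytics YOLOv8, each feature-map cell centre acts as an anchor point, and the head predicts the distances from that point to the box's left, top, right, and bottom edges in stride units; multiplying by the stride gives pixel coordinates. The real head predicts each distance as a discrete distribution (Distribution Focal Loss) and decodes its expected value; that step is omitted here, and the function names are hypothetical.

```python
import numpy as np


def make_anchor_points(h, w, offset=0.5):
    """Centres of an h x w feature-map grid in grid units, shape (h*w, 2) as (x, y)."""
    ys, xs = np.mgrid[0:h, 0:w]
    return np.stack([xs.ravel() + offset, ys.ravel() + offset], axis=1).astype(float)


def decode_ltrb(anchor_points, ltrb, stride):
    """Convert per-anchor distances (left, top, right, bottom) into xyxy pixel boxes."""
    x, y = anchor_points[:, 0], anchor_points[:, 1]
    left, top, right, bottom = ltrb.T
    return np.stack([x - left, y - top, x + right, y + bottom], axis=1) * stride


# A 2x2 grid at stride 8, every anchor predicting the same distances.
points = make_anchor_points(2, 2)
boxes = decode_ltrb(points, np.array([[0.5, 0.5, 1.0, 1.0]] * 4), stride=8)
print(boxes[0])   # box for the top-left cell, in pixels
```

Because no anchor shapes are fixed in advance, the same decoding works for the long, thin boxes typical of 1D barcodes and the square boxes of QR codes.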

B. Model Architecture

The YOLOv8 architecture is designed to speed up object detection while remaining robust in real-world scenarios. A notable change from earlier versions such as YOLOv5 is the replacement of anchor-box-based detection with anchor-free detection. Its convolutional modules were also revised to strengthen feature extraction, improving accuracy and performance.

In addition, mosaic augmentation, which stitches four training images into a single image, was used during training to increase the diversity of the training data and improve the model's robustness under different environmental conditions. These architectural and training improvements were key to the model's effectiveness and robustness in practical deployment.
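A minimal mosaic sketch, assuming four source images that are at least `size` pixels on each side and boxes in xyxy pixel format: a random centre splits the canvas into four quadrants, each filled with a crop from one image, and each image's boxes are shifted and clipped to its quadrant. Ultralytics' own mosaic differs in detail (it builds a larger canvas and applies a random affine transform afterwards), so the hypothetical `mosaic4` below illustrates the idea rather than the library's implementation.

```python
import numpy as np


def mosaic4(images, boxes_list, size=640, rng=None):
    """Simplified 2x2 mosaic augmentation.

    images: four H x W x 3 uint8 arrays, each assumed to be at least size x size
    boxes_list: four (N, 4) arrays of xyxy pixel boxes, one per image
    Returns a size x size x 3 mosaic and the shifted, clipped boxes.
    """
    rng = np.random.default_rng() if rng is None else rng
    cx, cy = (rng.uniform(0.25, 0.75, size=2) * size).astype(int)   # random split point
    canvas = np.full((size, size, 3), 114, dtype=np.uint8)            # grey background
    quads = [(0, 0, cx, cy), (cx, 0, size, cy), (0, cy, cx, size), (cx, cy, size, size)]
    kept = []
    for img, boxes, (x0, y0, x1, y1) in zip(images, boxes_list, quads):
        canvas[y0:y1, x0:x1] = img[:y1 - y0, :x1 - x0]     # top-left crop of the source
        b = np.asarray(boxes, dtype=float).reshape(-1, 4) + [x0, y0, x0, y0]
        b[:, [0, 2]] = b[:, [0, 2]].clip(x0, x1)            # clip boxes to their quadrant
        b[:, [1, 3]] = b[:, [1, 3]].clip(y0, y1)
        kept.append(b[(b[:, 2] > b[:, 0]) & (b[:, 3] > b[:, 1])])  # drop boxes cut away entirely
    return canvas, np.concatenate(kept, axis=0)
```

Each mosaic shows barcodes and QR codes at new positions, partial crops, and varied backgrounds, which is the diversity gain described above.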

C. Automated System

The automated point-of-sale (POS) system uses a client-server design: a Raspberry Pi mounted on the cart acts as the client and communicates with a main server. The Raspberry Pi's camera module sends a live feed to the server, which performs item recognition in real time. Offloading inference to the more powerful server makes inference more efficient than running it on the Raspberry Pi itself.
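The paper does not specify how the Raspberry Pi transmits its camera feed to the server. One simple possibility, shown here purely as an assumption, is to send each JPEG-encoded frame over a TCP socket with a 4-byte length prefix, so that the server can split the continuous byte stream back into individual frames. The helper names are hypothetical.

```python
import socket
import struct

HEADER = struct.Struct("!I")  # 4-byte big-endian frame length (assumed wire format)


def send_frame(sock, jpeg_bytes):
    """Client side (e.g. on the Raspberry Pi): send one encoded frame."""
    sock.sendall(HEADER.pack(len(jpeg_bytes)) + jpeg_bytes)


def _recv_exact(sock, n):
    """Read exactly n bytes, since recv() may return fewer than requested."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed mid-frame")
        buf.extend(chunk)
    return bytes(buf)


def recv_frame(sock):
    """Server side: read one length-prefixed frame."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return _recv_exact(sock, length)
```

On the cart, `send_frame` would be called with each encoded camera frame; on the server, a loop around `recv_frame` would hand frames to the detector. HTTP uploads or an MJPEG stream would be equally valid designs.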

D. Performance

Combining the YOLOv8 models for real-time object detection with the Pyzbar library for fast barcode decoding gave the system strong overall performance, and the tight integration of hardware and AI components kept checkout times short. The automated point-of-sale system updates the bill in real time as items are scanned, which improves customer convenience and satisfaction. Support for multiple payment methods allows transactions to be completed quickly, increasing overall operational efficiency.
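Real-time bill updates need one safeguard that is easy to overlook: the camera sees the same code in many consecutive frames, so each decoded code should be counted once per scan rather than once per frame. The sketch below assumes decoded strings arrive from Pyzbar (for example `obj.data.decode("utf-8")` for each result of `pyzbar.pyzbar.decode`); the `Bill` class, the catalogue contents, and the 2-second cooldown are hypothetical, illustrative values rather than details from the paper.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Bill:
    """Running bill for one cart (hypothetical helper)."""
    catalog: dict                 # code string -> (product name, unit price); assumed data
    cooldown_s: float = 2.0       # repeat reads of a code within this gap are ignored
    items: dict = field(default_factory=dict)       # code -> quantity
    last_seen: dict = field(default_factory=dict)   # code -> time of most recent read

    def scan(self, code, now=None):
        """Register one decoded code; return True if the bill changed."""
        now = time.monotonic() if now is None else now
        if code not in self.catalog:
            return False              # unknown code: leave the bill unchanged
        previous = self.last_seen.get(code)
        self.last_seen[code] = now    # refreshed on every read, so a code held in view stays suppressed
        if previous is not None and now - previous < self.cooldown_s:
            return False
        self.items[code] = self.items.get(code, 0) + 1
        return True

    def total(self):
        return sum(self.catalog[code][1] * qty for code, qty in self.items.items())
```

In the server loop, each string decoded from a frame would be passed to `bill.scan`, and a `True` return would trigger pushing the updated total to the cart display.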

E. Scalability and Maintenance

The system was designed with scaling and maintenance in mind to support its longevity and adaptability. Vertical scaling upgrades the main server's hardware to increase performance. Horizontal scaling, in this design, deploys additional AI-enabled smart carts to meet changing demand across retail environments. Together, these strategies are intended to let the system adapt to evolving needs while sustaining performance over time.

IV. YOLOV8 ARCHITECTURE

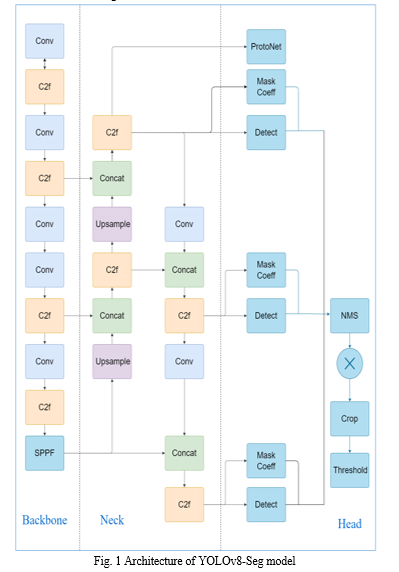

YOLOv8-Seg extends the YOLOv8 object detection framework with segmentation. For each object in an image, YOLOv8-Seg predicts not only a bounding box and class probabilities but also a pixel-wise mask that separates the object from the background.

Typically, the model structure is made up of the following parts:

A. Backbone

The backbone extracts features from the input image. It is a deep convolutional neural network (CNN) that progressively downsamples the image while increasing the number of feature channels.
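The downsampling can be quantified. Assuming the standard YOLOv8 layout, in which the detection head reads feature maps at strides 8, 16, and 32, a square input of side `imgsz` yields grids of `imgsz/8`, `imgsz/16`, and `imgsz/32` cells per side, and every cell is one candidate prediction location. The helper names below are hypothetical.

```python
STRIDES = (8, 16, 32)  # assumed YOLOv8 head strides for the P3, P4, P5 feature maps


def grid_sizes(imgsz, strides=STRIDES):
    """(rows, cols) of each detection feature map for a square imgsz x imgsz input."""
    return [(imgsz // s, imgsz // s) for s in strides]


def num_candidate_locations(imgsz, strides=STRIDES):
    """Total grid cells across all scales, i.e. the locations the head scores."""
    return sum(rows * cols for rows, cols in grid_sizes(imgsz, strides))


for size in (640, 960):
    print(size, grid_sizes(size), num_candidate_locations(size))
```

Moving from 640 px to 960 px inputs raises the number of candidate locations from 8,400 to 18,900 (a factor of 2.25), which is consistent with the longer inference times at 960 px in Table II.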

B. Neck

The neck processes the features extracted by the backbone and aggregates them across scales to further enhance them. Common designs for this stage are feature pyramid networks (FPN) and path aggregation networks (PAN); YOLOv8 combines a top-down FPN path with a bottom-up PAN path.
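The following shape-level sketch shows how a PAN-FPN neck fuses the three backbone outputs: a top-down pass upsamples coarse maps and concatenates them with finer ones, then a bottom-up pass downsamples and concatenates again. The learned layers (YOLOv8's C2f blocks and stride-2 convolutions) are deliberately omitted and replaced by nearest-neighbour upsampling and plain subsampling, so channel counts simply accumulate; the function names are hypothetical.

```python
import numpy as np


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def downsample2x(x):
    """Stand-in for a learned stride-2 convolution: keep every second pixel."""
    return x[:, ::2, ::2]


def pan_fpn(p3, p4, p5):
    """Fuse backbone maps at strides 8, 16, 32; returns the three fused maps."""
    # Top-down (FPN): carry coarse, semantic features to finer scales.
    t4 = np.concatenate([upsample2x(p5), p4], axis=0)
    t3 = np.concatenate([upsample2x(t4), p3], axis=0)
    # Bottom-up (PAN): carry fine, well-localized features back to coarser scales.
    b4 = np.concatenate([downsample2x(t3), t4], axis=0)
    b5 = np.concatenate([downsample2x(b4), p5], axis=0)
    return t3, b4, b5


p3, p4, p5 = np.zeros((64, 80, 80)), np.zeros((128, 40, 40)), np.zeros((256, 20, 20))
for fused in pan_fpn(p3, p4, p5):
    print(fused.shape)
```

The three fused maps keep the spatial sizes of the backbone outputs, so the head can still predict small, medium, and large objects at strides 8, 16, and 32.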

C. Head

The head of the model is where the final predictions are made. For YOLOv8-Seg, there are typically two heads: Detection Head and Segmentation Head.

D. Detection Head

As in the standard YOLOv8 model, this head predicts bounding boxes and class probabilities for object detection.

E. Segmentation Head

For every detected object, this head predicts a segmentation mask. Conceptually, it classifies each pixel as belonging to the object or to the background after the mask features are scaled up to the original image resolution.
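In the Ultralytics implementation, the segmentation head follows a YOLACT-style design: a prototype branch produces a small set of prototype masks (32 by default, at a quarter of the input resolution), and the head predicts one coefficient vector per detection. The sketch below assembles per-object masks from those two outputs. It uses nearest-neighbour upsampling for simplicity, and the function name is hypothetical.

```python
import numpy as np


def assemble_masks(coeffs, protos, boxes, img_hw, thresh=0.5):
    """Build binary instance masks from prototype masks and per-detection coefficients.

    coeffs: (N, K) mask coefficients, one row per detection
    protos: (K, h, w) prototype masks
    boxes:  (N, 4) xyxy boxes in image pixels
    img_hw: (H, W), assumed to be integer multiples of (h, w)
    """
    k, h, w = protos.shape
    logits = (coeffs @ protos.reshape(k, -1)).reshape(-1, h, w)  # linear combination of prototypes
    probs = 1.0 / (1.0 + np.exp(-logits))                          # sigmoid: per-pixel probability
    H, W = img_hw
    probs = probs.repeat(H // h, axis=1).repeat(W // w, axis=2)    # nearest-neighbour upsample
    ys = np.arange(H)[None, :, None]
    xs = np.arange(W)[None, None, :]
    x0, y0, x1, y1 = (boxes[:, i, None, None] for i in range(4))
    inside = (xs >= x0) & (xs < x1) & (ys >= y0) & (ys < y1)      # crop each mask to its box
    return (probs > thresh) & inside
```

Predicting a short coefficient vector per object, instead of a full mask, keeps the per-detection cost of segmentation small.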

F. Post-processing

Following the model's predictions, post-processing techniques are used to improve the outputs. This could involve thresholding to weed out low-confidence predictions and non-maximum suppression (NMS) to eliminate duplicate detections.
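Both post-processing steps can be written compactly: discard predictions below a confidence threshold, then apply greedy non-maximum suppression, which repeatedly keeps the highest-scoring box and removes the remaining boxes that overlap it beyond an IoU threshold. The threshold values here are illustrative, the function names are hypothetical, and the sketch is class-agnostic (per-class NMS would run it once per class).

```python
import numpy as np


def _area(b):
    return (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])


def iou_one_to_many(box, boxes):
    """IoU between one xyxy box and an (N, 4) array of xyxy boxes."""
    iw = np.clip(np.minimum(box[2], boxes[:, 2]) - np.maximum(box[0], boxes[:, 0]), 0, None)
    ih = np.clip(np.minimum(box[3], boxes[:, 3]) - np.maximum(box[1], boxes[:, 1]), 0, None)
    inter = iw * ih
    union = _area(box) + _area(boxes) - inter
    return inter / np.maximum(union, 1e-9)


def postprocess(boxes, scores, conf_thresh=0.25, iou_thresh=0.7):
    """Confidence filtering followed by greedy NMS; returns indices of kept boxes."""
    order = np.flatnonzero(scores >= conf_thresh)
    order = order[np.argsort(-scores[order])]          # highest confidence first
    keep = []
    while order.size:
        best, order = order[0], order[1:]
        keep.append(int(best))
        order = order[iou_one_to_many(boxes[best], boxes[order]) < iou_thresh]
    return keep
```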

Fig. 1 shows the architecture of the YOLOv8 segmentation model, consisting of its backbone, neck, and head.

V. METHODOLOGY

A. Training Process

We gathered barcode and QR code datasets from Roboflow, a platform that hosts a variety of datasets for machine learning applications. The resulting data, with 1,780 training samples and 220 validation samples, covers a broad range of the barcode and QR code patterns seen in everyday situations.

B. Data Preprocessing

Every image was annotated in the Roboflow web application before training. Annotation involved uploading the images to a Roboflow account and carefully outlining each visible barcode and QR code. Each annotated object was assigned a class label for use in model training.
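For reference, YOLO segmentation labels store one object per line: the class index followed by the polygon's vertex coordinates, each normalized by the image width or height. Roboflow can export annotations in this format; the helper below is a hypothetical sketch of the conversion, and the class indices used in the example are assumptions.

```python
def yolo_seg_line(class_id, polygon, img_w, img_h):
    """Format one polygon annotation as a YOLO segmentation label line (hypothetical helper).

    polygon: (x, y) vertices in pixels; each coordinate is divided by the image
    width or height and clamped to [0, 1].
    """
    def norm(v, size):
        return min(max(v / size, 0.0), 1.0)

    coords = []
    for x, y in polygon:
        coords += [f"{norm(x, img_w):.6f}", f"{norm(y, img_h):.6f}"]
    return " ".join([str(class_id), *coords])


# Assumed class indices: 0 = barcode, 1 = QR code. A 640 x 480 image with a rectangular barcode outline:
print(yolo_seg_line(0, [(64, 48), (320, 48), (320, 144), (64, 144)], 640, 480))
```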

C. Model Training

We trained the models on Google Colab and Kaggle, which offer free GPU resources well suited to deep learning. Because these free services have limited usage quotas, we trained YOLOv8-segmentation models in four sizes: nano, small, medium, and large. These sizes allowed us to study the trade-off between model complexity and inference time.
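Training the four model sizes at both image sizes reported in Table I can be scripted with the Ultralytics API. In this sketch, the dataset file name `data.yaml` and the epoch count are assumptions (the paper does not report them), and the Ultralytics import is deferred so that the run-enumeration helper can be used without the library installed.

```python
from itertools import product

# Model sizes trained in the paper, mapped to Ultralytics segmentation weight names.
SEG_WEIGHTS = {"Nano": "yolov8n-seg.pt", "Small": "yolov8s-seg.pt",
               "Medium": "yolov8m-seg.pt", "Large": "yolov8l-seg.pt"}
IMAGE_SIZES = (640, 960)


def training_runs():
    """Every (model size, weights, image size) combination listed in Table I."""
    return [(name, SEG_WEIGHTS[name], imgsz) for name, imgsz in product(SEG_WEIGHTS, IMAGE_SIZES)]


def train_all(data_yaml="data.yaml", epochs=100):
    """Train each combination; data_yaml and epochs are assumed values, not from the paper."""
    from ultralytics import YOLO  # deferred import: only needed when actually training
    for name, weights, imgsz in training_runs():
        YOLO(weights).train(data=data_yaml, imgsz=imgsz, epochs=epochs,
                            name=f"{name.lower()}-{imgsz}")
```

On a free Colab or Kaggle session, the runs would typically be launched one at a time to stay within the usage quota.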

D. Model evaluation

The trained YOLOv8-segmentation models were evaluated on a separate validation set. Precision, recall, and intersection over union (IoU) were used to measure how accurately the models detected and segmented barcode and QR code regions.
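Precision and recall depend on how predictions are matched to ground truth. The sketch below assumes predictions are already sorted by decreasing confidence and matches each one greedily to the unmatched ground-truth box with the highest IoU, counting the match as a true positive if the IoU is at least 0.5. For mask metrics, the same ratio is computed over mask pixels instead of box areas. The function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two xyxy boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, iou_thresh=0.5):
    """Precision and recall for predictions sorted by decreasing confidence.

    Each prediction is matched greedily to the unmatched ground-truth box with the
    highest IoU; a match at or above iou_thresh is a true positive.
    """
    matched, tp = set(), 0
    for p in preds:
        candidates = [(iou(p, g), j) for j, g in enumerate(gts) if j not in matched]
        best_iou, best_j = max(candidates, default=(0.0, None))
        if best_j is not None and best_iou >= iou_thresh:
            matched.add(best_j)
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall
```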

To gauge the models' overall effectiveness across various object classes and sizes, the mean Average Precision (mAP) metric was also computed.

E. System Integration

The system was integrated using Python and Flask, which were used to build a web application for the smart cart system. The Ultralytics Python library was used to load the YOLOv8-segmentation models, enabling real-time object detection and segmentation.

F. Performance Validation

Performance validation included real-world testing to assess the efficacy and efficiency of the integrated smart cart system. The key metrics measured were system responsiveness, product recognition accuracy, and checkout time. Customer satisfaction surveys and feedback were also collected to evaluate the overall user experience and identify areas where the system needs improvement.

VI. EMPIRICAL RESULTS

A. Performance Validation

On the validation set, the trained YOLOv8-segmentation models performed well, achieving high precision and recall in detecting and segmenting barcode and QR code regions (Table I).

B. Mean Average Precision (mAP)

mAP50 (mean Average Precision at IoU 0.5): the average precision of a detector across object classes, where a prediction counts as correct if its intersection over union (IoU) with the ground truth is at least 0.5.

mAP50-95 (mean Average Precision over IoU 0.5 to 0.95): the average precision averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05, which gives a stricter and more complete assessment of the model's localization quality.
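Average precision is the area under the precision-recall curve after each precision value is replaced by the maximum precision at any higher recall (the precision envelope); mAP averages AP over classes, and mAP50-95 additionally averages over the ten IoU thresholds. The sketch below uses all-point interpolation; COCO-style evaluators sample the envelope at 101 recall points instead, so their values can differ slightly. The function names are hypothetical.

```python
import numpy as np


def average_precision(recall, precision):
    """All-point interpolated AP from recall/precision points ordered by decreasing confidence."""
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([1.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]   # precision envelope: non-increasing in recall
    i = np.flatnonzero(r[1:] != r[:-1])         # indices where recall increases
    return float(np.sum((r[i + 1] - r[i]) * p[i + 1]))


IOU_THRESHOLDS = np.linspace(0.5, 0.95, 10)    # 0.50, 0.55, ..., 0.95


def map50_95(ap_at_each_threshold):
    """mAP50-95 for one class: mean of its AP values at the ten IoU thresholds."""
    if len(ap_at_each_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one AP value per IoU threshold")
    return float(np.mean(ap_at_each_threshold))
```

For example, a curve that keeps precision 1.0 up to recall 0.5 and then drops to 0.5 at recall 1.0 has an AP of 0.5 x 1.0 + 0.5 x 0.5 = 0.75.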

C. Detection Speed and Efficiency

The YOLOv8-segmentation models detected and segmented barcode and QR code regions quickly. On the Colab CPU, the nano and small models were the fastest, whereas the medium and large models showed noticeable inference latency (about 1.2 to 5.3 s, Table II).

Hardware accelerators such as GPUs and TPUs reduce inference times substantially for every model; on the T4 GPU, all models run in under 0.17 s.

With such accelerators, the medium and large models, as well as higher-resolution (960 px) inputs, become practical for interactive use. This matters because, at 640 px, the larger models achieve higher box mAP50-95 for both barcodes and QR codes (Table I).

TABLE I

Validation mAP of the YOLOv8-segmentation models. (B): bounding-box metrics; (M): segmentation-mask metrics.

| Object | Image Size (px) | Model Size | mAP50 (B) | mAP50-95 (B) | mAP50 (M) | mAP50-95 (M) |
|---|---|---|---|---|---|---|
| Barcode | 640 | Nano | 0.98721 | 0.79983 | 0.98839 | 0.77097 |
| Barcode | 640 | Small | 0.98663 | 0.80345 | 0.98674 | 0.76968 |
| Barcode | 640 | Medium | 0.98406 | 0.80917 | 0.98423 | 0.77614 |
| Barcode | 640 | Large | 0.98658 | 0.81626 | 0.98714 | 0.78642 |
| Barcode | 960 | Nano | 0.99018 | 0.78593 | 0.99018 | 0.76224 |
| Barcode | 960 | Small | 0.9879 | 0.79942 | 0.98911 | 0.77836 |
| Barcode | 960 | Medium | 0.98758 | 0.78336 | 0.98730 | 0.76510 |
| Barcode | 960 | Large | 0.99027 | 0.80223 | 0.99022 | 0.7832 |
| QR Code | 640 | Nano | 0.9737 | 0.87177 | 0.97452 | 0.81192 |
| QR Code | 640 | Small | 0.97848 | 0.87723 | 0.97848 | 0.81982 |
| QR Code | 640 | Medium | 0.98488 | 0.88571 | 0.98488 | 0.83096 |
| QR Code | 640 | Large | 0.98336 | 0.89245 | 0.98336 | 0.83001 |
| QR Code | 960 | Nano | 0.98236 | 0.872 | 0.98236 | 0.83557 |
| QR Code | 960 | Small | 0.98061 | 0.86524 | 0.98022 | 0.83178 |
| QR Code | 960 | Medium | 0.97017 | 0.84889 | 0.96866 | 0.80827 |
| QR Code | 960 | Large | 0.96905 | 0.82932 | 0.96905 | 0.80079 |

TABLE II

Inference time of the YOLOv8-segmentation models on a Google Colab CPU, an NVIDIA T4 GPU, and a TPU v2.

| Object | Image Size (px) | Model Size | Colab CPU (s) | T4 GPU (s) | TPU v2 (s) |
|---|---|---|---|---|---|
| Barcode | 640 | Nano | 0.3315 | 0.0334 | 0.1067 |
| Barcode | 640 | Small | 0.5175 | 0.0407 | 0.1469 |
| Barcode | 640 | Medium | 1.2053 | 0.0516 | 0.2154 |
| Barcode | 640 | Large | 2.3062 | 0.0950 | 0.2715 |
| Barcode | 960 | Nano | 0.4392 | 0.0386 | 0.1288 |
| Barcode | 960 | Small | 1.1858 | 0.0485 | 0.2015 |
| Barcode | 960 | Medium | 2.8432 | 0.0846 | 0.3754 |
| Barcode | 960 | Large | 5.3255 | 0.1435 | 0.5767 |
| QR Code | 640 | Nano | 0.2283 | 0.0272 | 0.0946 |
| QR Code | 640 | Small | 0.5487 | 0.0452 | 0.1113 |
| QR Code | 640 | Medium | 1.2455 | 0.0661 | 0.1929 |
| QR Code | 640 | Large | 2.3022 | 0.1127 | 0.2783 |
| QR Code | 960 | Nano | 0.4584 | 0.0470 | 0.1245 |
| QR Code | 960 | Small | 1.1406 | 0.0600 | 0.2044 |
| QR Code | 960 | Medium | 2.6841 | 0.1017 | 0.3873 |
| QR Code | 960 | Large | 5.0839 | 0.1649 | 0.5246 |
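Table II's latencies are easier to compare as frame rates. Assuming each value is the time to process a single image (the table does not state the batch size), the sketch below converts the 640 px barcode rows to frames per second and computes the T4 GPU's speedup over the CPU. The dictionary simply restates values from Table II; the helper names are hypothetical.

```python
# Latencies in seconds, copied from Table II (barcode models, 640 px inputs).
TABLE_II_BARCODE_640 = {
    "Nano":   {"cpu": 0.3315, "t4": 0.0334, "tpu": 0.1067},
    "Small":  {"cpu": 0.5175, "t4": 0.0407, "tpu": 0.1469},
    "Medium": {"cpu": 1.2053, "t4": 0.0516, "tpu": 0.2154},
    "Large":  {"cpu": 2.3062, "t4": 0.0950, "tpu": 0.2715},
}


def fps(latency_s):
    """Frames per second, assuming one image per measured latency."""
    return 1.0 / latency_s


def speedup(slow_s, fast_s):
    return slow_s / fast_s


for size, t in TABLE_II_BARCODE_640.items():
    print(f"{size:<6} CPU {fps(t['cpu']):5.1f} fps | T4 {fps(t['t4']):5.1f} fps "
          f"({speedup(t['cpu'], t['t4']):4.1f}x) | TPU v2 {fps(t['tpu']):5.1f} fps")
```

On these numbers, the T4 runs the nano model at roughly 30 frames per second, about 10 times the CPU rate, and the speedup grows with model size (about 24 times for the large model). The TPU v2 timings fall between the CPU and T4 values in every row of Table II.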

Conclusion

Retail shopping stands to benefit greatly from automated POS systems that make shopping carts smarter. Through data collection, preprocessing, and model training, we have shown that YOLOv8 can effectively improve the modern shopping experience. YOLOv8-powered smart carts could transform sales and customer satisfaction in supermarkets and retail stores by simplifying checkout, reducing wait times, and offering customers greater convenience. As technology advances rapidly, YOLOv8 and other AI-driven solutions represent a critical step toward an efficient, customer-focused retail environment, provided they are integrated seamlessly with the retail sector's growing adoption of AI.

References

[1] S. Gupta, "Arduino Based Smart Cart," ResearchGate, Dec. 2013. [Online]. Available: https://www.researchgate.net/publication/269696290_ARDUINO_BASED_SMART_CART

[2] S. Karjol, A. K. Holla, and Abhilash C. B., "An IOT Based Smart Shopping Cart for Smart Shopping," 2018, doi: 10.1007/978-981-10-9059-2_33.

[3] Anusha B. G. and Nagaveni V., "A Review on Smart Cart Shopping System Using IOT," Zenodo, 2019. [Online]. Available: https://zenodo.org/records/2581203

[4] S. R. Subudhi and R. N. Ponnalagu, "An Intelligent Shopping Cart with Automatic Product Detection and Secure Payment System," in 2019 IEEE 16th India Council International Conference (INDICON), Dec. 2019, doi: 10.1109/indicon47234.2019.9030331.

[5] Y. Xiao and Z. Ming, "1D Barcode Detection via Integrated Deep-Learning and Geometric Approach," Applied Sciences, vol. 9, no. 16, p. 3268, Aug. 2019, doi: 10.3390/app9163268.

[6] M. Sonmale, V. Chavan, A. Jadhav, R. Kadam, and N. Mane, "Smart Trolley for Shopping Using RFID," International Journal of Advanced Research in Science, Communication and Technology, pp. 104–107, Nov. 2021, doi: 10.48175/ijarsct-2095.

[7] C. Paul and M. Godambe, "Barcode Scanning System," ResearchGate, Nov. 2021. [Online]. Available: https://www.researchgate.net/publication/358660068_Barcode_Scanning_System

[8] M. Chung, "Anti Theft Technology for Smart Carts Using Dual Beacons and a Weight Sensor," Mobile Information Systems, vol. 2022, pp. 1–11, Jul. 2022, doi: 10.1155/2022/7770768.

[9] F. Mariam, G. B. S., N. N. S. P., and B. S. Ganavi, "A Review on Smart Shopping Trolley with Mobile Cart Application," International Journal for Research in Applied Science and Engineering Technology, vol. 10, no. 3, pp. 1037–1042, Mar. 2022, doi: 10.22214/ijraset.2022.40793.

[10] L. K. Gupta, P. Mamoria, S. Sivaraman, and S. Pal, "The Disruption of Retail Commerce by AI and ML: A Futuristic Perspective," Oct. 2023. [Online]. Available: https://www.eurchembull.com/uploads/paper/cdf435d9015ce81a880ba28bad1ef8ad.pdf

Copyright

Copyright © 2024 S. S. Jadhav, Darshan Tholiya, Jaysheel Dodia, Venkatesh Soni. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET62589

Publish Date : 2024-05-23

ISSN : 2321-9653

Publisher Name : IJRASET
